Live Video Processing

One of the main features of the SmartFace Platform is video processing and its analytics. SmartFace can process either live video streams (Live Video Processing) or recorded video footage (Rapid Video Processing).

With Live Video Processing, SmartFace can process multiple parallel video streams in real time from various video sources. Processing runs continuously from the moment it is enabled until it is manually stopped. During video stream processing, SmartFace handles various types of events and promptly sends notifications in response to them.

Sources

SmartFace Platform is hardware-independent and can process videos from various sources:

- IP camera

- Video management system (VMS)

- Networked video recorder (NVR)

- USB camera

- Laptop’s onboard camera

- Edge devices

- Static video file played in a continuous loop

Please note:

- IP cameras, VMS and NVR sources must provide a Real Time Streaming Protocol (RTSP) stream, which is then processed by SmartFace.

- Edge devices must be running the SmartFace Embedded Stream Processor.

- Static video files, USB cameras and laptop webcams are supported for demonstration or exhibition purposes only.

Each video source is handled by the SmartFace Platform as either a Camera or an Edge Stream, and each source must be registered individually in the SmartFace Platform.

Example: If you have a facility with 10 IP cameras that you want to connect to SmartFace, 10 Cameras must be registered in the SmartFace Platform.
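The one-registration-per-source rule can be sketched as follows. This is a minimal illustration only: the helper `build_camera_payloads` and the payload fields (`name`, `source`, `enabled`) are assumptions made for this example, not the documented SmartFace REST API schema, and the addresses are placeholder values.

```python
from urllib.parse import urlparse


def build_camera_payloads(rtsp_urls):
    """Build one registration payload per video source.

    The payload fields are hypothetical placeholders, not the real
    SmartFace REST API schema.
    """
    payloads = []
    for index, url in enumerate(rtsp_urls, start=1):
        # IP cameras, VMS and NVR sources must expose an RTSP stream.
        # Accepting "rtsps" (RTSP over TLS) is an assumption of this sketch.
        if urlparse(url).scheme not in ("rtsp", "rtsps"):
            raise ValueError(f"not an RTSP URL: {url}")
        payloads.append(
            {"name": f"camera-{index}", "source": url, "enabled": True}
        )
    return payloads


# Ten IP cameras (example addresses) yield ten separate registrations.
urls = [f"rtsp://192.0.2.{host}:554/stream" for host in range(1, 11)]
payloads = build_camera_payloads(urls)
print(len(payloads))
```

Each payload would then be submitted as its own registration, via SmartFace Station or the REST API, so that every source ends up as a separate Camera entity.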

Camera

The Camera is a SmartFace Platform entity that represents a live video source processed on the server side, in most cases an IP camera that produces an RTSP stream. You can add, manage and delete Cameras via SmartFace Station or programmatically via the SmartFace REST API. Every Camera has its own configuration and its own set of enabled or disabled features, and is processed in a separate service.

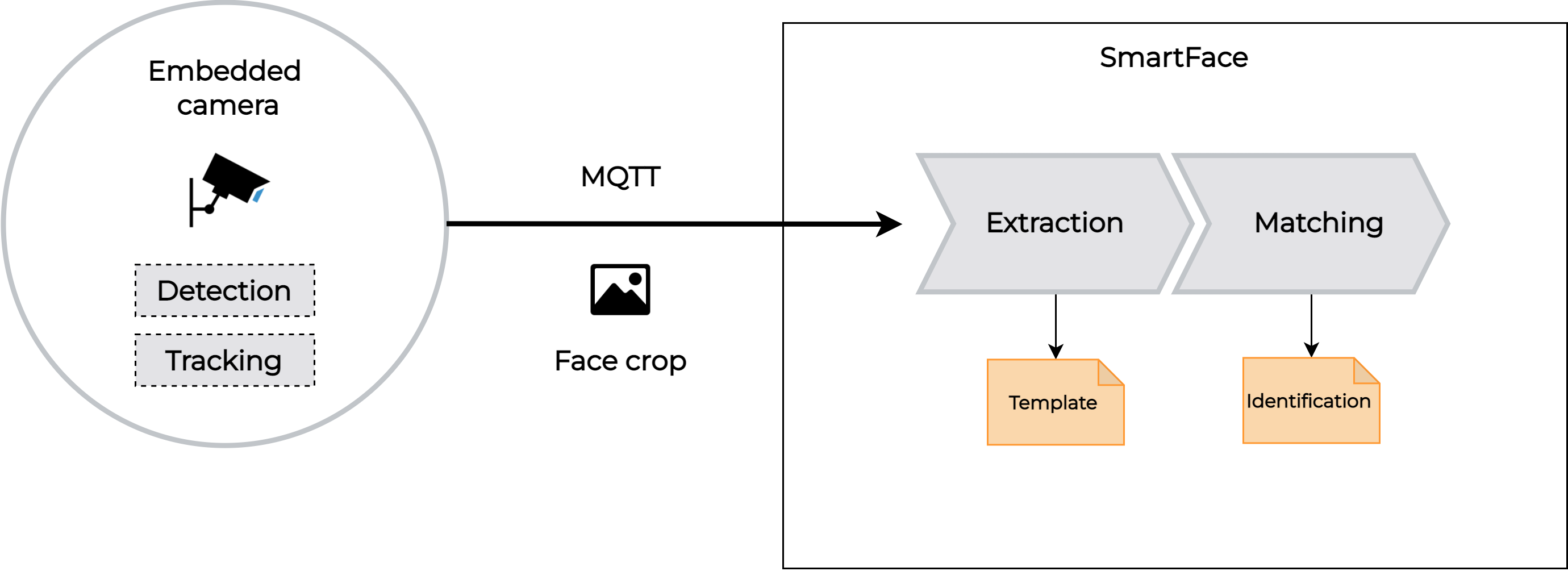

Edge Stream

The Edge Stream is a SmartFace Platform entity that represents a live video source processed on the edge, in most cases an IP camera with hardware-accelerated processing that produces MQTT messages, which are sent to the SmartFace Platform. You can add, manage and delete Edge Streams via SmartFace Station or programmatically via the SmartFace REST API. Every Edge Stream has its own configuration and its own set of enabled or disabled features, and is processed in a shared service.

| | Camera | Edge Stream |
|---|---|---|
| Video decoding | on the server side | on the edge |
| Input to SmartFace Platform | video stream | metadata only |
| Processing | separate service | shared service |

Pipeline

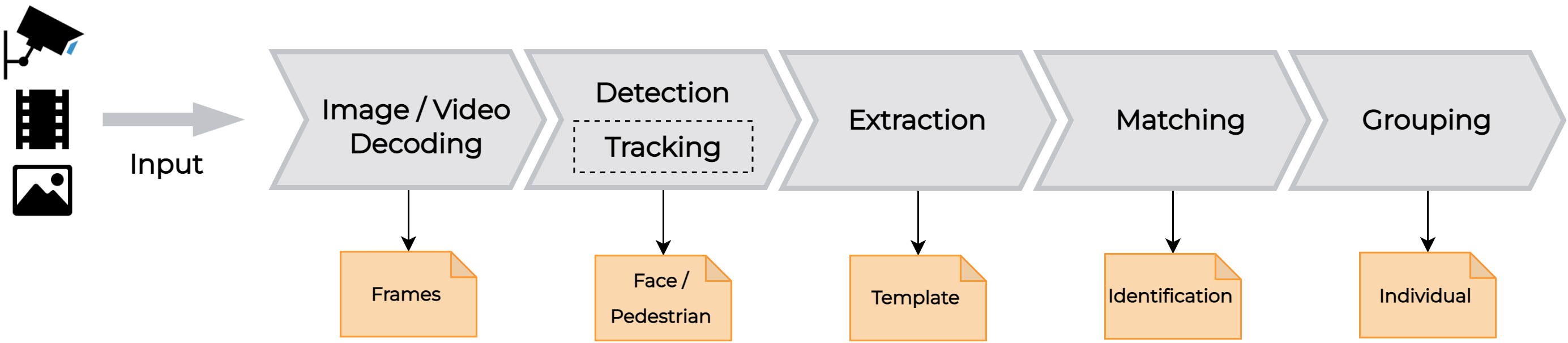

In video processing, a pipeline refers to a sequence of processing steps or stages through which video data flows. Each stage in the pipeline typically performs a specific task or transformation on the video data, and the output of one stage becomes the input for the next.

Live video can be processed conventionally on the server by the SmartFace Platform or on the edge device (camera, AI box) by the SmartFace Embedded Stream Processor.

Irrespective of the chosen method, each pipeline contains the following steps: Video decoding, Detection, Extraction, Matching and Tracking. All steps are described in detail in the Processing Features chapter.
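The idea that the output of one stage becomes the input of the next can be sketched in a few lines. This is a conceptual illustration, not SmartFace code: the stage bodies are placeholders that only record their name, and `make_stage` and `run_pipeline` are hypothetical helpers introduced for this example.

```python
def make_stage(name):
    """Return a placeholder stage that records its name in the trace."""

    def stage(frame):
        # A real stage would transform the frame data; here we only
        # append the stage name so the data flow is visible.
        return {**frame, "trace": frame["trace"] + [name]}

    return stage


STEP_NAMES = ["Video decoding", "Detection", "Extraction", "Matching", "Tracking"]
PIPELINE = [make_stage(name) for name in STEP_NAMES]


def run_pipeline(frame, stages):
    """Feed the output of each stage into the next one."""
    for stage in stages:
        frame = stage(frame)
    return frame


result = run_pipeline({"frame_id": 1, "trace": []}, PIPELINE)
print(result["trace"])
```

Keeping every stage behind the same interface is what allows individual steps to be moved between the server and an edge device without changing the rest of the chain.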

Server Processing

Server Processing is the default method by which SmartFace processes live video streams. The initial step of the Server Processing pipeline is Video Decoding.

When deployed on Windows, the video stream is processed by FFmpeg; when deployed in Docker (on Linux), it is processed by GStreamer. Both FFmpeg and GStreamer follow the same high-level processing pipeline, and all steps are performed by the SmartFace Platform on the server side.

Edge Processing

In the context of SmartFace, Edge Processing offers the capability to offload one or more processing steps from the server side to edge devices, which can include cameras or other hardware-accelerated devices. This offloading process delivers several notable advantages:

- Resource Efficiency: Edge Processing significantly reduces the hardware requirements on the server side. By utilizing the capabilities of edge devices, server resources can be allocated more efficiently.

- Improved Speed: Offloading processing steps to edge devices enhances the overall processing speed, enabling quicker results and responses.

- Reduced Latency: SmartFace’s Edge Processing minimizes latency, ensuring that data processing and analytics occur in near real-time.

- Bandwidth Optimization: By shifting processing to edge devices, network bandwidth usage is drastically reduced. This optimization results in a more streamlined and cost-effective data transmission.

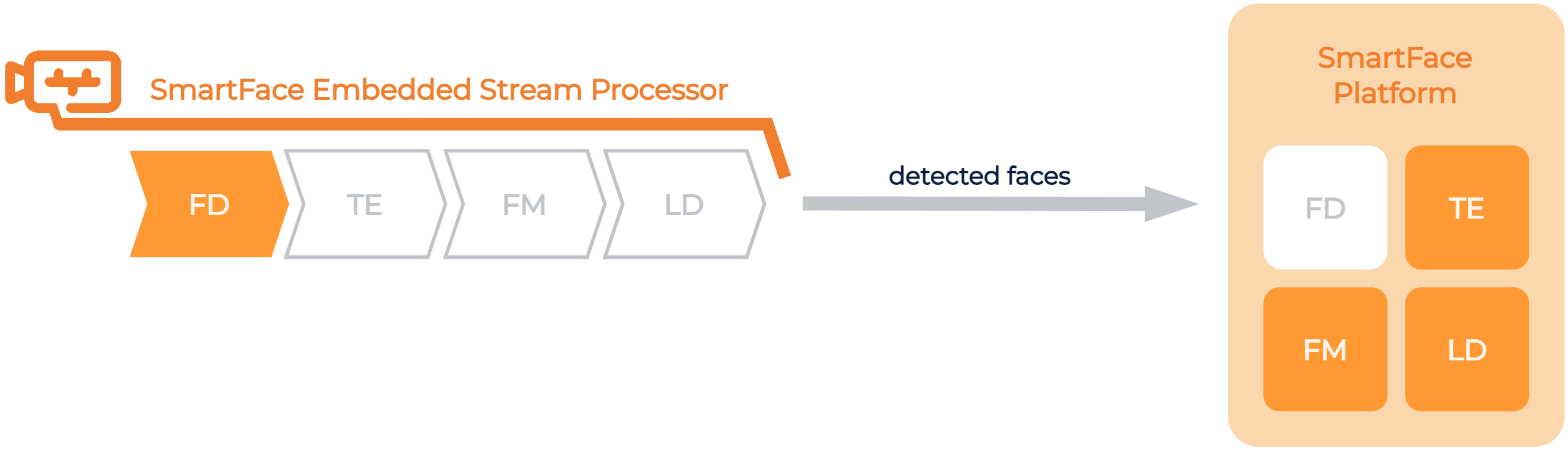

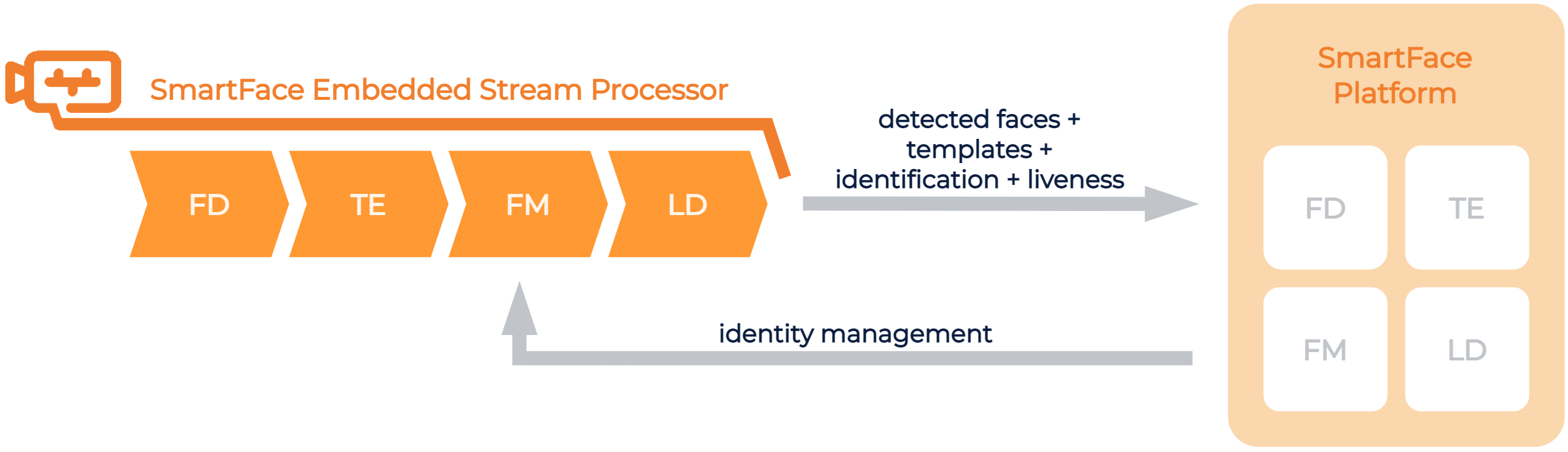

Edge Processing is configurable: individual pipeline steps can be turned on or off to match the capabilities of the hardware.

| | High-end edge device | Low-performing smart camera |
|---|---|---|
| Video Decoding | ✅ | ✅ |
| Detection* | ✅ | ✅ |
| Tracking* | ✅ | ✅ |
| Extraction* | ✅ | ❌ |
| Matching | ✅ | ❌ |
| Liveness | ✅ | ❌ |

*Currently, only face-related operations can be performed on the edge.
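The split between edge and server can be sketched as a simple lookup. The two profiles below mirror the device classes compared in this section; the dictionary layout and the `split_steps` helper are assumptions made for illustration and do not reflect the actual configuration format. The sketch also assumes that any step not enabled on the edge is performed by the SmartFace Platform on the server.

```python
PIPELINE_STEPS = [
    "Video Decoding",
    "Detection",
    "Tracking",
    "Extraction",
    "Matching",
    "Liveness",
]

# Steps enabled on the edge for each (hypothetically named) device profile.
EDGE_PROFILES = {
    "high_end": set(PIPELINE_STEPS),
    "low_performing": {"Video Decoding", "Detection", "Tracking"},
}


def split_steps(profile):
    """Return (steps on the edge, steps left to the server) in pipeline order."""
    edge_steps = EDGE_PROFILES[profile]
    on_edge = [step for step in PIPELINE_STEPS if step in edge_steps]
    on_server = [step for step in PIPELINE_STEPS if step not in edge_steps]
    return on_edge, on_server


for profile in EDGE_PROFILES:
    on_edge, on_server = split_steps(profile)
    print(profile, "| edge:", on_edge, "| server:", on_server)
```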

Low-performing Edge Stream device (FD: Face Detection, TE: Template Extraction, FM: Face Matching, LD: Liveness Detection)

High-end Edge Stream device (FD: Face Detection, TE: Template Extraction, FM: Face Matching, LD: Liveness Detection)

Configuration

The SmartFace Edge Processing pipeline consists of two parts. The first part runs directly on the edge device and is provided by the SmartFace Embedded Stream Processor; the second part runs inside the SmartFace Platform. The two parts communicate via MQTT.
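The two-part design means that only metadata, not video, crosses the network. The sketch below illustrates that hand-off with plain JSON. Everything specific in it is an assumption: the topic name, the message fields and both helper functions are hypothetical, the real message format is not described in this section and may differ (it may well be binary), and the MQTT transport itself is omitted.

```python
import json

# Hypothetical topic name; the real topic layout is defined by the product.
TOPIC = "edge-stream/example-camera/detections"


def encode_edge_message(stream_id, timestamp_ms, faces):
    """Edge side: serialize detection metadata (no video frames)."""
    message = {"stream_id": stream_id, "timestamp_ms": timestamp_ms, "faces": faces}
    return json.dumps(message).encode("utf-8")


def decode_edge_message(payload):
    """Platform side: parse the metadata received over MQTT."""
    return json.loads(payload.decode("utf-8"))


# Illustrative values only.
payload = encode_edge_message(
    "example-camera",
    1700000000000,
    [{"box": [10, 20, 64, 64], "quality": 0.9}],
)
received = decode_edge_message(payload)
print(TOPIC, received["faces"][0]["box"])
```

In a deployment, the edge part would publish such a payload to the broker and the platform part would subscribe to it; a message of this size is far smaller than the video frame it describes, which is where the bandwidth saving comes from.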

Detailed configuration of SmartFace Embedded Stream Processor is described here.