Features

The face recognition process includes the detection of the face, detection of facial landmarks, face template extraction and 1:1 matching (verification) or 1:N matching (identification).

- Face Detection

- Face Landmarks Extraction

- Face Mask Detection

- Face Template Extraction

- Face Verification

- Face Identification

- Face Liveness Detection

- Face Quality Evaluation

- Iris Detection

- Iris Template Extraction

- Iris Verification

- Iris Identification

Face Detection

Face detection is the process of finding all faces in the input image.

SmartFace Embedded can detect faces of various sizes, orientations, facial hair types and ethnicities. The face can be partly occluded by glasses, sunglasses or a hat. SmartFace Embedded can operate under many different lighting conditions and with varying image quality.

Face Size

Face size is defined as the maximum of two distances: the distance between the eye centers, and the distance between the center of the mouth and the center point between the eyes (nose root). For more information about face biometry, please read here.
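As an illustration of this definition, the sketch below computes the face size from three points. It assumes the eye centers and the mouth center are already known in image pixel coordinates; `Point2`, `pointDistance` and `faceSize` are hypothetical helpers, not part of the SFE Toolkit API.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical 2D point in image pixel coordinates (not an SFE Toolkit type).
struct Point2 {
    float x;
    float y;
};

// Euclidean distance between two points.
float pointDistance(const Point2 &a, const Point2 &b) {
    return std::hypot(a.x - b.x, a.y - b.y);
}

// Face size: the maximum of the eye distance and the distance between the
// mouth center and the nose root (midpoint between the eye centers).
float faceSize(const Point2 &left_eye, const Point2 &right_eye,
               const Point2 &mouth_center) {
    const Point2 nose_root{(left_eye.x + right_eye.x) / 2.0f,
                           (left_eye.y + right_eye.y) / 2.0f};
    return std::max(pointDistance(left_eye, right_eye),
                    pointDistance(mouth_center, nose_root));
}
```

For eye centers at (100, 100) and (170, 100) and a mouth center at (135, 160), the eye distance (70 px) is larger than the mouth-to-nose-root distance (60 px), so the face size is 70 px.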

In SFE Toolkit, the minimum and maximum face sizes are inputs to the sfeFaceDetectInputSize function. This function returns the recommended dimensions to which the image should be downscaled before face detection.

The face area is closely related to the face size. It is an area around a face defined by a bounding rectangle with width = 4 x face_size, height = width / 0.75 (according to ISO/IEC 19794-5 standard, section 9.2).

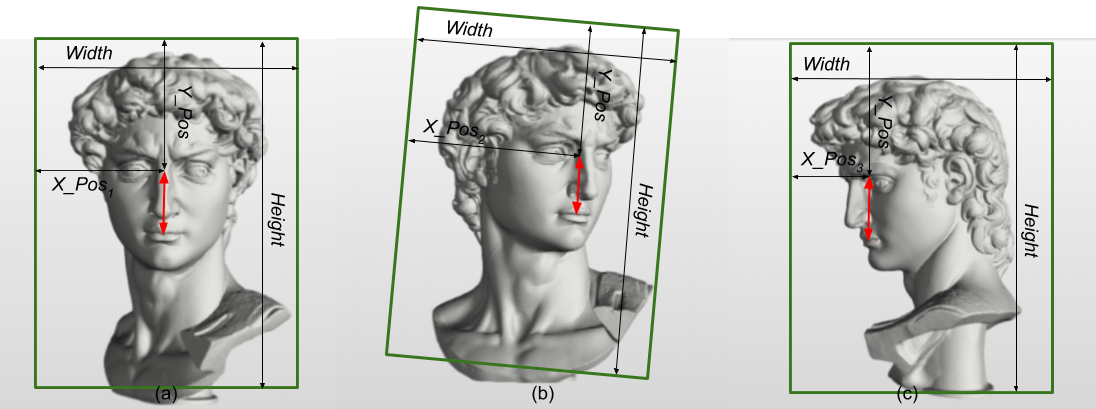

The point that is the geometric center between the eyes is positioned in the face area at (X_Pos, Y_Pos), where Y_Pos = 0.6 * width and X_Pos depends on the head yaw rotation. This ensures that the whole head lies within the face area regardless of the head rotation.

Face area: visualizations of face areas (green boxes) for a face in various positions. The face size is the distance shown by the red arrow. The position of the face area depends on the face yaw rotation, as shown in (a), (b) and (c). The positions of the eye centers within the face areas are the same in the Y direction (Y_Pos) but change in the X direction (X_Pos1, X_Pos2, X_Pos3). Width and Height are the same in all three cases.

The size of the face area relative to the image area can be used to specify the sizes of faces that should be detected.

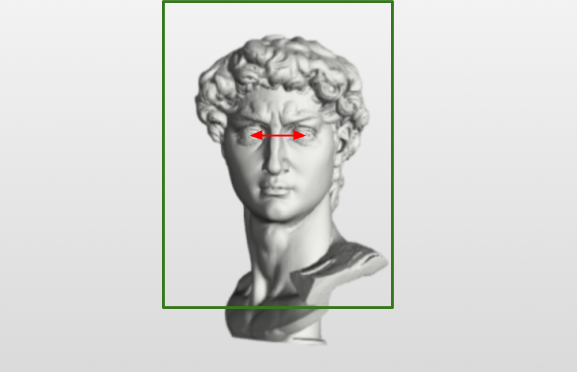

If the image above has a resolution of 730x470 pixels (image_area_size = 730 * 470 = 343100) and face_size is 70 pixels (face_area_width = 70 * 4 = 280, face_area_height = face_area_width / 0.75 = 373, face_area_size = 280 * 373 = 104440), then the face area relative to the image area is relative_image_size = 104440 / 343100 = 0.304 (30.4%). The face area is marked with a green box and the face size with a red arrow.
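The arithmetic of this example can be reproduced with a small helper. This is a sketch only: `faceAreaPixels` and `relativeFaceArea` are hypothetical names, and the face area height is rounded down to whole pixels to match the numbers above.

```cpp
#include <cassert>
#include <cmath>

// Face area in pixels: width = 4 * face_size, height = width / 0.75,
// with the height rounded down to whole pixels as in the worked example.
long faceAreaPixels(int face_size) {
    assert(face_size > 0);
    const long width = 4L * face_size;
    const long height = static_cast<long>(std::floor(width / 0.75));
    return width * height;
}

// Face area relative to the whole image area.
double relativeFaceArea(int face_size, int image_width, int image_height) {
    assert(image_width > 0 && image_height > 0);
    const double image_area = static_cast<double>(image_width) * image_height;
    return static_cast<double>(faceAreaPixels(face_size)) / image_area;
}
```

With face_size = 70 and a 730x470 image, this gives 104440 / 343100, i.e. about 0.304.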

Sometimes, when faces are close to the image boundaries, their face areas can extend beyond the image. Some applications may want to find these faces (and filter them out). For this reason, the SFE Toolkit provides functionality to return the part of the face area that is visible in the image.

*Example of face area visible in the image. Relative values: (a) 0.30, (b) 0.5, (c) 1.*

In SFE Toolkit use the function sfeFaceArea, which returns the actual face size, the face area and the face area relative to the image. Find more information in the Face Area section.
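A minimal sketch of how the visible part of a face area could be computed: place the face area around the eye midpoint and intersect it with the image rectangle. It uses Y_Pos = 0.6 * width from the definition above but assumes X_Pos = width / 2 (a frontal face), because the exact yaw dependence of X_Pos is not described here. `Rect`, `faceAreaRect` and `clipToImage` are hypothetical helpers; in the SFE Toolkit, sfeFaceArea returns the corresponding values.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical axis-aligned rectangle in pixel coordinates (not an SFE type).
struct Rect {
    double left, top, right, bottom;
    // Area of the rectangle; an empty (inverted) rectangle has area 0.
    double area() const {
        return std::max(0.0, right - left) * std::max(0.0, bottom - top);
    }
};

// Face area placed around the eye midpoint (eye_x, eye_y).
// Y_Pos = 0.6 * width as described above; X_Pos is ASSUMED to be width / 2,
// i.e. a frontal face (the real X_Pos depends on the head yaw).
Rect faceAreaRect(double eye_x, double eye_y, double face_size) {
    assert(face_size > 0.0);
    const double width = 4.0 * face_size;
    const double height = width / 0.75;
    const double x_pos = 0.5 * width;  // assumption: yaw = 0
    const double y_pos = 0.6 * width;
    const double left = eye_x - x_pos;
    const double top = eye_y - y_pos;
    return {left, top, left + width, top + height};
}

// The part of a face area that lies inside an image_width x image_height image.
Rect clipToImage(const Rect &r, int image_width, int image_height) {
    return {std::max(r.left, 0.0), std::max(r.top, 0.0),
            std::min(r.right, static_cast<double>(image_width)),
            std::min(r.bottom, static_cast<double>(image_height))};
}
```

Dividing the clipped area by the full face area gives the visible fraction illustrated in the figure (1.0 when the whole face area is inside the image, 0.5 when half of it is cut off); dividing it by the image area gives the face relative area in the image.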

SmartFace Embedded can detect faces in various rotations.

Possible face rotations (as defined in DIN 9300).

Find more information in the Headpose angles section.

SmartFace Embedded provides different face detection modes. Choosing a mode adjusts the trade-off between the speed and accuracy of face detection. Face detection accuracy has two meanings:

- the ratio between falsely accepted faces and falsely rejected faces,

- the precision of facial feature point detection.

Face detection returns an array of bounding boxes of the detected faces together with their detection confidence. Face detection confidence is a value in the range <0.0, 1.0>; the higher the value, the better the quality of the detected face. The decision threshold is around 0.06, but it depends on the face image quality, camera angle, etc. Detected faces are sorted by face detection confidence in descending order, so the face with the highest confidence is the first (index 0) in the array.

In SFE Toolkit use the function sfeFaceDetect.

Example

```cpp
SFEFaceDetect detected_face{};

// Detect faces in the image
float threshold = 0.1f;
size_t detection_count = 1;

BENCHMARK("sfeFaceDetect") {
    SFEError error =
        sfeFaceDetect(detector_solver, resized_image, threshold,
                      &detected_face, &detection_count);
    utils::checkError(error);
    REQUIRE(detection_count == 1);
};

std::cout << std::endl;
std::cout << "Face detection confidence: "
          << detected_face.raw_confidence << std::endl;
```
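The ordering and thresholding described above can be illustrated on plain data. sfeFaceDetect already returns faces ordered by confidence; this sketch only shows the rule, using a hypothetical `Detection` struct rather than the SDK's SFEFaceDetect type, and it treats the threshold as a minimum confidence, which is an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical detection record (not the SFE Toolkit's SFEFaceDetect type).
struct Detection {
    int id;            // illustrative identifier
    float confidence;  // detection confidence in <0.0, 1.0>
};

// Keep detections with confidence >= threshold and order them by
// confidence, highest first, so the best face ends up at index 0.
std::vector<Detection> filterAndSort(std::vector<Detection> detections,
                                     float threshold) {
    detections.erase(
        std::remove_if(detections.begin(), detections.end(),
                       [threshold](const Detection &d) {
                           return d.confidence < threshold;
                       }),
        detections.end());
    std::stable_sort(detections.begin(), detections.end(),
                     [](const Detection &a, const Detection &b) {
                         return a.confidence > b.confidence;
                     });
    return detections;
}
```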

Recommended input image dimensions

Before face detection, the image size can be adjusted to fit the required range of face sizes to be detected, which improves detection accuracy. The combination of the required minimal and maximal face sizes is validated against the model constraints. If it is valid, the recommended width and height of the image are calculated. The original image can then be resized using a specific function from sfe_toolkit.

In SFE Toolkit use the function sfeFaceDetectInputSize.

Example

```cpp
// Solvers without width and height in their name accept a dynamic input
// image size. For best results, we recommend scaling the input image to
// the resolution recommended by the sfeFaceDetectInputSize function.

// The accuracy parameter depends on the detection solver used
auto accuracy_type =
    SFEFaceDetectAccuracyType::SFE_FACE_DETECT_ACCURACY_TYPE_BALANCED;
if (solver_face_detect.find("accurate") != std::string::npos) {
    accuracy_type =
        SFEFaceDetectAccuracyType::SFE_FACE_DETECT_ACCURACY_TYPE_ACCURATE;
}

// Use sfeFaceDetectInputSize to get the recommended input image dimensions
// for the given face_size_min/face_size_max
SFEError error = sfeFaceDetectInputSize(
    image, accuracy_type, face_size_min, face_size_max,
    &recommended_width, &recommended_height);
utils::checkError(error);
```
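If the detected bounding boxes are expressed in pixels of the resized image (an assumption to verify against the SFE Toolkit reference; the landmarks example passes detected_face.bbox together with the original image, which may mean the SDK already handles this), an application that needs boxes in original-image coordinates could scale them back as sketched here. `Box` and `toOriginalCoordinates` are hypothetical.

```cpp
#include <cassert>

// Hypothetical box in pixel coordinates (not an SFE type).
struct Box {
    float x, y, width, height;
};

// Scale a box found on a resized image back to the original image.
// ASSUMPTION: the box is expressed in pixels of the resized image.
Box toOriginalCoordinates(const Box &b, int resized_w, int resized_h,
                          int original_w, int original_h) {
    assert(resized_w > 0 && resized_h > 0);
    const float sx = static_cast<float>(original_w) / resized_w;
    const float sy = static_cast<float>(original_h) / resized_h;
    return {b.x * sx, b.y * sy, b.width * sx, b.height * sy};
}
```

Separate horizontal and vertical scale factors keep the mapping correct even when the resized image does not preserve the original aspect ratio exactly.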

Dynamic model input shape

Face detection ONNX models used in the SFE Toolkit support dynamic input shapes. This means you can pass an image of any resolution to face detection. Note that the larger the input image, the longer face detection takes.

Based on the resolution of the input image and the required face size to be detected, we can recommend the size of the image for face detection. See more in the Recommended input image dimensions section.

Static model input shape

Some NN conversion tools and NN inference engines, for example Rockchip and Ambarella, do not support dynamic model input shapes. In this case, we provide multiple face detection models with the input shape set according to the customer's needs, for example Full HD (1920x1080), HD (1280x720), 640x360, etc.

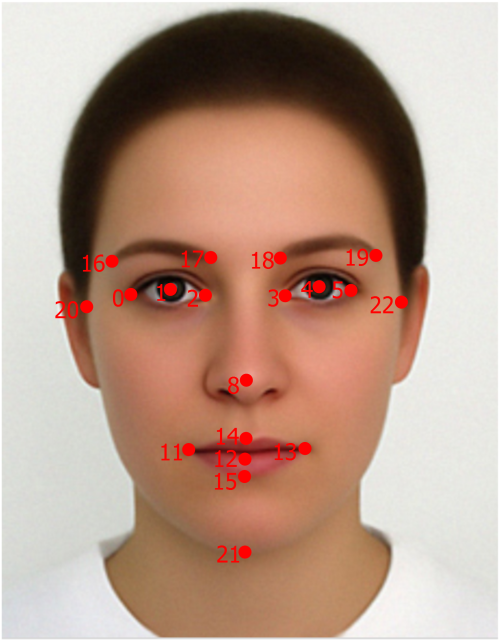

Face Landmarks Extraction

Facial landmark extraction is the process of detecting 23 landmarks (feature points) on a detected face. These points include the positions of the eyes, eyebrows, nose, mouth, chin and edges of the face. Each detected landmark consists of the landmark type, x and y coordinates relative to the input image, and a confidence. All detected landmarks and their IDs can be seen in the picture below.

Facial landmarks and their IDs detected by SmartFace Embedded.

In SFE Toolkit use the function sfeFaceLandmarks to extract the landmarks from the detected face.

Example

```cpp
// Use the landmarks detection solver to get the relevant landmarks
// for the detected face
std::vector<SFEFaceLandmarks> landmarks(SFE_FACE_LANDMARK_COUNT);

BENCHMARK("sfeFaceLandmarks") {
    SFEError error =
        sfeFaceLandmarks(landmarks_solver, image,
                         detected_face.bbox, landmarks.data());
    utils::checkError(error);
};
```

A list of all facial landmarks is defined in the enum SFEFaceLandmarkType. The position of each output facial landmark is always relative to the input image origin, which is pixel [0,0] in the upper-left corner. The position of the feature point is returned as a pair of x and y coordinates with float precision. SFEFaceLandmarks also includes the confidence of the detected landmark.

Landmarks are used for face mask status detection, normalization of the face before the face template extraction, face liveness detection and other operations.

Face Mask Detection

Face mask detection determines whether a face mask is present on the given face. The presence of the mask is expressed by the mask confidence, a value in the range <0.0, 1.0>. The recommended decision threshold is around 0.5.

In SFE Toolkit use the function sfeFaceMaskConfidence.

Example

```cpp
{ // Optional: get the face mask confidence
    float mask_confidence;
    SFEError error =
        sfeFaceMaskConfidence(landmarks.data(), &mask_confidence);
    utils::checkError(error);
    std::cout << "Face mask confidence: " << mask_confidence
              << std::endl;
}
```

Face Template Extraction

SmartFace Embedded can also be used for 1:1 (verification) and 1:N (identification) face recognition.

To perform 1:1 or 1:N matching, it is necessary to extract a face template. A face template is a feature vector describing the face. The face template is only 522 B in size, so the memory requirements for identification are low and matching is very fast. SmartFace Embedded supports multiple template extraction modes. Choosing a mode adjusts the trade-off between face template creation speed and face template quality. Higher-quality face templates give better results in 1:1 or 1:N face matching. Templates created with different template extraction modes are not compatible, so they cannot be combined in the verification and identification process. To check compatibility, compare the retrieved template versions.

- fast - Face templates suitable for verification with fairly good accuracy are created when the fast mode is used. Face template creation is very fast. Suitable for mobile/embedded devices.

- balanced - Face templates suitable for verification/identification with high accuracy are created when the balanced mode is used. The speed of face template creation is between that of the accurate_mask and fast modes. The balanced model can also extract templates from faces wearing a face mask.

- accurate_mask - Face templates suitable for verification/identification with very high accuracy are created when the accurate_mask mode is used. However, face template creation is slower than in the balanced or fast mode.

See the Supported Platforms and Features tables to check which models are supported on your hardware.

In SFE Toolkit use the function sfeFaceTemplateExtract. To use the correct template extraction mode, load the appropriate solver:

- face_template_extract_fast

- face_template_extract_balanced

- face_template_extract_accurate_mask

Example

```cpp
// Use the template extraction solver to create a new face template
SFEFaceTemplate face_template;

BENCHMARK("sfeFaceTemplateExtract") {
    SFEError error = sfeFaceTemplateExtract(
        template_solver, image, landmarks.data(),
        detected_face.raw_confidence, &face_template);
    utils::checkError(error);
};
```

In SFE Stream Processor enable face template extraction by setting the face_extraction.enable=true in settings.yaml.

To use the correct template extraction mode, set the path to the appropriate solver in solvers.face_extraction in settings.yaml:

- face_template_extract_fast

- face_template_extract_balanced

- face_template_extract_accurate_mask

The face template includes the template version and quality. Both can be obtained using C API.

- Template version is used during matching to make sure the versions of the templates being matched are the same. The balanced and accurate_mask models produce templates of different versions that cannot be matched. When using multiple SmartFace Embedded Toolkits on multiple platforms, or in conjunction with the SmartFace platform, and template compatibility is required, make sure the same template extraction model is used on both sides.

- Template quality is a value in the range <0.0, 1.0> that indicates the suitability of the template for matching. Higher template quality leads to better matching results.

In SFE Toolkit use the functions sfeFaceTemplateVersion and sfeFaceTemplateQuality.

Example

```cpp
SFEFaceTemplateVersion face_template_version;
SFEError error =
    sfeFaceTemplateVersion(&face_template, &face_template_version);
utils::checkError(error);

std::cout << std::endl;
std::cout << "Face template version: "
          << face_template_version.version_major << "."
          << face_template_version.version_minor << std::endl;

float face_template_quality;
// Reuse `error`: declaring it a second time in the same scope would not compile
error = sfeFaceTemplateQuality(&face_template, &face_template_quality);
utils::checkError(error);

std::cout << std::endl;
std::cout << "Face template quality: " << face_template_quality
          << std::endl;
```

Face verification template compatibility

The face template has its own system of version numbers. A major number change indicates radical changes in the internal structure of the template document. A minor number change indicates a change in the extraction algorithm or a minor change in the template data. Newer face templates (extracted with a faster or more accurate algorithm) are not compatible with previous templates.

In SFE Toolkit use the function sfeFaceTemplateVersion.
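The compatibility rule can be expressed as a simple check before matching. `TemplateVersion` and `areTemplatesCompatible` are hypothetical; the field names mirror the version_major/version_minor members printed in the template version example above, and the sketch assumes, as the text states, that only templates with identical versions can be matched.

```cpp
#include <cassert>

// Hypothetical version record (not the SFE SFEFaceTemplateVersion type).
struct TemplateVersion {
    int version_major;
    int version_minor;
};

// Two templates may be matched only when both version numbers agree.
bool areTemplatesCompatible(const TemplateVersion &a, const TemplateVersion &b) {
    return a.version_major == b.version_major &&
           a.version_minor == b.version_minor;
}
```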

Face Template Verification

Verification is a comparison of two extracted face templates and it returns a matching score which refers to the probability that two templates are extracted from the face of the same person. When the matching score is higher than a certain score threshold then the face images belong to the same person with high probability. The matching score range is <0.0, 1.0>. Its values can be interpreted as follows:

- Low values of the score, i.e. the range <0, 0.6>, are normalized using FAR values and this formula: score_L = -10 * log10(FAR) / 100. This means that a score of 0.3 corresponds to FAR = 1:1000 = 10^-3, and a score of 0.5 corresponds to FAR = 1:100000 = 10^-5 (evaluated on our large testing dataset of non-matching pairs).

- High values of the score, i.e. the range <0.8, 1.0>, are normalized using FRR values and this formula: score_H = 100/3 * (FRR + 2) / 100. This means that a score of 0.8 corresponds to FRR = 0.4, and a score of 0.9 corresponds to FRR = 0.7 (evaluated on our large testing dataset of matching pairs).

- Score values in the range (0.6, 0.8) are weighted averages of score_L and score_H.

This normalization helps users select the score threshold according to their needs. If the threshold is too low, e.g. 0.3, the chance of falsely accepting non-matching faces is quite high (FAR = 10^-3). If it is too high, e.g. 0.9, the chance of falsely rejecting matching faces is quite high (FRR = 0.7).
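The two normalization formulas and their inverses can be written out as below, which makes it easy to translate a target FAR or FRR into a score threshold. The logarithm is base 10 (this is what makes FAR = 10^-3 map to 0.3). The weighting used for scores in (0.6, 0.8) is not specified, so the sketch covers only the two outer ranges; the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Low-score branch, score in <0, 0.6>: score_L = -10 * log10(FAR) / 100.
double scoreFromFar(double far) {
    assert(far > 0.0 && far <= 1.0);
    return -10.0 * std::log10(far) / 100.0;
}

// Inverse of the low-score branch: the FAR associated with a threshold.
double farFromScore(double score) {
    return std::pow(10.0, -10.0 * score);
}

// High-score branch, score in <0.8, 1.0>: score_H = 100/3 * (FRR + 2) / 100.
double scoreFromFrr(double frr) {
    return 100.0 / 3.0 * (frr + 2.0) / 100.0;
}

// Inverse of the high-score branch: the FRR associated with a threshold.
double frrFromScore(double score) {
    return 3.0 * score - 2.0;
}
```

For example, a required FAR of 1:10000 corresponds to a threshold of scoreFromFar(1e-4) = 0.4.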

In SFE Toolkit use the function sfeFaceTemplateMatch.

Example

```cpp
auto match_template_data =
    utils::readFile(template_match);
SFEFaceTemplate *match_template =
    reinterpret_cast<SFEFaceTemplate *>(
        match_template_data.data());

THEN("match templates") {
    float match_confidence;

    BENCHMARK("sfeFaceTemplateMatch") {
        SFEError error = sfeFaceTemplateMatch(
            &face_template, match_template,
            &match_confidence);
        utils::checkError(error);
    };

    std::cout << std::endl;
    std::cout << "Match confidence: " << match_confidence
              << std::endl;
}
```

Face Template Identification

Identification is the process of finding the best matching candidates for the probe template in the template gallery (database). The maximum number of best candidates returned by the identification function can be configured, depending on the use case. Identification returns only candidates whose matching score with the probe template is higher than or equal to the matching score threshold. For the matching score threshold recommendation, please refer to the section Face Template Verification.

Identification runs purely on the CPU, and the number of CPU cores it uses can be configured to balance identification performance against the overall CPU utilization of your application.
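The candidate selection rule (apply the threshold, then keep at most N best scores in descending order) is sketched below on precomputed scores. It does not reproduce how sfeFaceTemplateIdentify computes scores or uses CPU cores; `Candidate` and `identify` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical candidate record (not the SFE SFEIdentificationResult type).
struct Candidate {
    std::size_t index;  // position of the template in the gallery
    float score;        // matching score with the probe template
};

// Given one precomputed matching score per gallery template, return at most
// max_candidates entries whose score >= threshold, best score first.
std::vector<Candidate> identify(const std::vector<float> &scores,
                                float threshold, std::size_t max_candidates) {
    std::vector<Candidate> candidates;
    for (std::size_t i = 0; i < scores.size(); ++i) {
        if (scores[i] >= threshold) {
            candidates.push_back({i, scores[i]});
        }
    }
    const std::size_t keep = std::min(max_candidates, candidates.size());
    // Only the first `keep` entries need to be sorted.
    std::partial_sort(candidates.begin(),
                      candidates.begin() + static_cast<std::ptrdiff_t>(keep),
                      candidates.end(),
                      [](const Candidate &a, const Candidate &b) {
                          return a.score > b.score;
                      });
    candidates.resize(keep);
    return candidates;
}
```

std::partial_sort avoids sorting the whole candidate list when only a few best matches are requested, which matters for large galleries.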

In SFE Toolkit use the function sfeFaceTemplateIdentify.

Example

```cpp
// Use the gallery template to fill in a gallery of templates
// NOTE: In real use cases the gallery would be filled with various
// templates of different faces
auto gallery_template_data = utils::readFile(template_gallery);
SFEFaceTemplate gallery_template =
    *reinterpret_cast<SFEFaceTemplate *>(gallery_template_data.data());

// Load the needle template that will be placed into the gallery
auto needle_template_data = utils::readFile(template_needle);
SFEFaceTemplate needle_template =
    *reinterpret_cast<SFEFaceTemplate *>(needle_template_data.data());

// Initialize the gallery with a needle inside
// (named `gallery` so it is not confused with the template_gallery file path)
std::vector<SFEFaceTemplate> gallery(1000, gallery_template);
size_t needle_position = 987;
gallery[needle_position] = needle_template;

THEN("identify template") {
    float match_threshold = 0.6f;
    size_t candidate_count = 1;
    std::vector<SFEIdentificationResult> results(candidate_count);

    BENCHMARK("sfeFaceTemplateIdentify") {
        SFEError error = sfeFaceTemplateIdentify(
            &face_template, gallery.data(), gallery.size(),
            match_threshold, results.data(), &candidate_count, 0);
        utils::checkError(error);
    };

    results.resize(candidate_count);
    std::cout << std::endl;
    std::cout << "Found " << results.size() << " candidates " << std::endl;
    for (auto &result : results) {
        std::cout << "Template index: #" << result.index
                  << ", score: " << result.score << std::endl;
    }

    REQUIRE(results[0].index == needle_position);
}
```

In SFE Stream Processor enable identification by setting the face_identification.enable=true in settings.yaml.

See also face_identification configuration to set other identification parameters like threshold, candidate count and path to the storage.

Face Liveness Detection

Face recognition systems are vulnerable to spoof attacks made with non-real faces. Such systems can easily be spoofed with facial artifacts such as portrait photographs, masks and videos, which are easily available from social media. A secure system needs a liveness evaluation strategy to guard against such spoofing.

Face liveness detection is the process of recognizing spoof attacks and non-real faces. It can distinguish a real face from a photograph, a mask or a video. The passive liveness score is defined over the interval <0,1>.

SmartFace Embedded currently supports two modes of passive liveness detection:

- DISTANT - Passive liveness check for access control use-case (walkthrough), where the distance between the face and the camera is bigger and the size of the face (eye distance) is smaller.

- NEARBY - Passive liveness check for digital onboarding use-case (selfie), where the distance between the face and the camera is smaller and the size of the face (eye distance) is bigger.

The specific threshold for evaluating the retrieved liveness score should be set according to your security needs. To achieve reliable accuracy, you should also check that certain face attributes fall within the recommended ranges.

Recommended threshold for various passive liveness types

| | Distant | Nearby |
|---|---|---|
| Threshold | 0.842 | 0.913 |

Recommended face attributes

| Face Attribute | Distant | Nearby |
|---|---|---|
| Face confidence | [0.1; 1.0] | [0.1; 1.0] |
| Face size | [30; inf] | [60; inf] |
| Face relative area | [0.009; inf] | [0.25; inf] |
| Face relative area in the image | [0.9; inf] | - |
| Yaw angle | [-20; 20] | [-20; 20] |
| Pitch angle | [-20; 20] | [-20; 20] |
| Brightness | - | [0.11; 0.75] |
| Contrast | - | [0.25; 0.8] |
| Sharpness | [0.6; inf] | [0.7; inf] |
| Unique intensity level | - | [0.525; 1.0] |
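One way to combine the threshold with the recommended face attributes for the DISTANT mode is shown below. The ranges are copied from the tables above; `FaceAttributes`, `meetsDistantRecommendations` and `isLiveDistant` are hypothetical, angles are assumed to be in degrees, and using `>=` for the threshold comparison is an assumption.

```cpp
#include <cassert>

// Hypothetical bundle of face attributes gathered from the detection,
// face area, head pose and quality functions (not an SFE type).
struct FaceAttributes {
    float confidence;
    float face_size;               // pixels
    float relative_area;
    float relative_area_in_image;
    float yaw;                     // degrees (assumed)
    float pitch;                   // degrees (assumed)
    float sharpness;
};

// Recommended ranges for DISTANT passive liveness, copied from the table.
bool meetsDistantRecommendations(const FaceAttributes &f) {
    return f.confidence >= 0.1f && f.confidence <= 1.0f &&
           f.face_size >= 30.0f &&
           f.relative_area >= 0.009f &&
           f.relative_area_in_image >= 0.9f &&
           f.yaw >= -20.0f && f.yaw <= 20.0f &&
           f.pitch >= -20.0f && f.pitch <= 20.0f &&
           f.sharpness >= 0.6f;
}

// Accept as live only if the face is suitable and the score passes the
// recommended DISTANT threshold (adjust it to your own security needs).
bool isLiveDistant(const FaceAttributes &f, float liveness_score) {
    constexpr float kDistantThreshold = 0.842f;
    return meetsDistantRecommendations(f) && liveness_score >= kDistantThreshold;
}
```

Checking the attributes first means an unsuitable face (for example, one turned too far away) is rejected rather than judged on an unreliable liveness score.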

In SFE Toolkit use the function sfeFaceLivenessPassive. To use the correct liveness detection mode, load the appropriate solver:

- face_liveness_passive_distant

- face_liveness_passive_nearby

Example

```cpp
// Use the passive liveness solver to calculate the liveness score
float liveness_score{};

BENCHMARK("sfeFaceLivenessPassive") {
    SFEError error = sfeFaceLivenessPassive(
        liveness_solver, image, landmarks.data(),
        &liveness_score);
    utils::checkError(error);
};
```

In SFE Stream Processor enable passive liveness detection by setting the face_liveness_passive.enable=true in settings.yaml.

To use the correct liveness detection mode, set the path to the appropriate solver in solvers.face_liveness_passive in settings.yaml:

- face_liveness_passive_distant

- face_liveness_passive_nearby

Face Quality Evaluation

SmartFace Embedded supports various functions to evaluate face attributes.

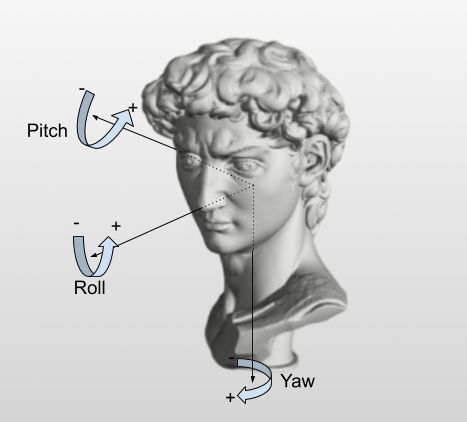

Headpose angles

Face head pose attributes contain angle rotations of the head, specifically yaw, pitch and roll.

- The roll is a face attribute representing the angular rotation of the head relative to the camera reference frame around the Z-axis.

- The yaw is a face attribute representing the angular rotation of the head relative to the camera reference frame around the Y-axis.

- The pitch is a face attribute representing the angular rotation of the head relative to the camera reference frame around the X-axis.

In SFE Toolkit use the function sfeFaceHeadPose.

Example

```cpp
{ // Head pose angles
    SFEFaceHeadPose head_pose{};

    BENCHMARK("sfeFaceHeadPose") {
        SFEError error =
            sfeFaceHeadPose(image, landmarks.data(), &head_pose);
        utils::checkError(error);
    };

    std::cout << std::endl;
    std::cout << "Head pose angles -> roll: " << head_pose.roll
              << ", yaw: " << head_pose.yaw
              << ", pitch: " << head_pose.pitch << std::endl;
}
```

Face Area

The face area is closely related to the face size. It is an area around a face defined by a bounding rectangle with width = 4 x face_size and height = width / 0.75 (according to the ISO/IEC 19794-5 standard, section 9.2). The point that is the geometric center between the eyes is positioned in the face area at (X_Pos, Y_Pos), where Y_Pos = 0.6 * width and X_Pos depends on the head yaw rotation. This ensures that the whole head lies within the face area regardless of the head rotation.

Face relative area is the whole face area (including the part exceeding the image borders) relative to the image area. Face relative area in the image is the part of the face area within the image borders relative to the image area.

In SFE Toolkit use the function sfeFaceArea.

Example

```cpp
{ // Face area
    SFEFaceArea area{};

    BENCHMARK("sfeFaceArea") {
        SFEError error =
            sfeFaceArea(image, landmarks.data(), &area);
        utils::checkError(error);
    };

    std::cout << std::endl;
    std::cout << "Face area-> size: " << area.size
              << ", area: " << area.area
              << ", area_in_image: " << area.area_in_image
              << std::endl;
}
```

Face Image Quality Attributes

The face image quality attributes that can be calculated are sharpness, brightness, contrast and unique intensity levels. All attributes are normalized, and their values lie in the range <0, 1>.

- The sharpness is a face attribute for evaluating whether the face area of the image is not blurred. The decision threshold for sharpness is 0.5; values near 0 indicate ‘very blurred’, and values near 1 indicate ‘very sharp’.

- The brightness is a face attribute for evaluating whether the face area is correctly exposed. Values near 0 indicate ‘too dark’, values near 1 indicate ‘too light’, and values around 0.5 indicate OK. The decision thresholds are around 0.25 and 0.75.

- The contrast is a face attribute for evaluating whether the face area has enough contrast. Values near 0 indicate ‘very low contrast’, values near 1 indicate ‘very high contrast’, and values around 0.5 indicate OK. The decision thresholds are around 0.25 and 0.75.

- The unique intensity levels represent a face attribute for evaluating whether the face area has an appropriate number of unique intensity levels. Values near 0 indicate ‘very few unique intensity levels’, and values near 1 indicate ‘enough unique intensity levels’. The decision threshold is around 0.5.
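A sketch of applying the approximate decision thresholds listed above to the four quality attributes. `QualityValues` and `isAcceptableQuality` are hypothetical (not the SFEFaceQualityAttributes type); note that the recommended ranges for passive liveness in the Face Liveness Detection tables differ for some attributes.

```cpp
#include <cassert>

// Hypothetical copy of the four normalized quality attributes.
struct QualityValues {
    float sharpness;
    float brightness;
    float contrast;
    float unique_intensity_levels;
};

// Check each attribute against the approximate decision thresholds.
bool isAcceptableQuality(const QualityValues &q) {
    const bool sharp = q.sharpness >= 0.5f;
    const bool exposed = q.brightness >= 0.25f && q.brightness <= 0.75f;
    const bool contrasted = q.contrast >= 0.25f && q.contrast <= 0.75f;
    const bool enough_levels = q.unique_intensity_levels >= 0.5f;
    return sharp && exposed && contrasted && enough_levels;
}
```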

In SFE Toolkit use the function sfeFaceQualityAttributes.

Example

```cpp
SFEFaceQualityAttributes quality{};

BENCHMARK("sfeFaceQualityAttributes") {
    SFEError error = sfeFaceQualityAttributes(
        image, landmarks.data(), &quality);
    utils::checkError(error);
};
```

Iris Detection

Iris detection is a process of detecting the iris and pupil in the input eye image.

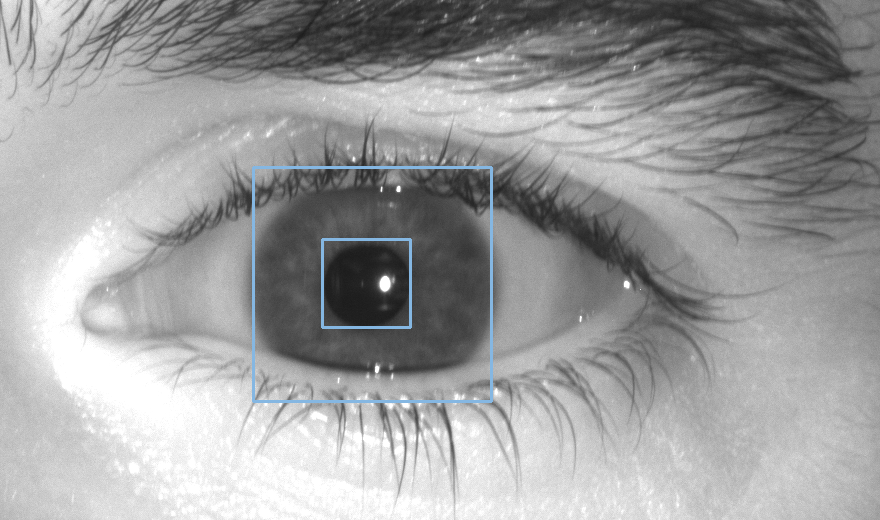

Iris detection result annotated in the input image.

Iris detection returns the detected iris as a structure containing the iris bounding box, the pupil bounding box, the detection confidence and the iris keypoints. Iris detection confidence is a value in the range <0.0, 1.0> that expresses the detector's confidence in the detected iris. The iris detection confidence can be used to filter low-quality irises out of further processing (template extraction and matching). The recommended threshold is 0.7.

In SFE Toolkit use the function sfeIrisDetect.

Iris Template Extraction

SmartFace Embedded can also be used for 1:1 (verification) and 1:N (identification) iris recognition.

To perform 1:1 or 1:N matching, it is necessary to extract an iris template. The iris template is a feature vector describing the iris. The iris template is only 522 B in size, so the memory requirements for identification are low and matching is very fast.

In SFE Toolkit use the function sfeIrisTemplateExtract.

The iris template includes the template version and quality. Both can be obtained using C API.

- Template version is used during matching to make sure the versions of the template being matched are the same.

- Template quality is a value in the range <0.0, 1.0> that indicates the suitability of the template for matching. Higher template quality leads to better matching results.

In SFE Toolkit use the functions sfeIrisTemplateVersion and sfeIrisTemplateQuality.

Iris verification template compatibility

The iris template has its own system of version numbers. A major number change indicates radical changes in the internal structure of the template document. A minor number change indicates a change in the extraction algorithm or a minor change in the template data. Newer iris templates (extracted with a faster or more accurate algorithm) are not compatible with previous templates.

In SFE Toolkit use the function sfeIrisTemplateVersion.

Iris Template Verification

Verification is a comparison of two extracted iris templates and it returns a matching score which refers to the probability that the two templates are extracted from the iris of the same eye. When the matching score is higher than a certain score threshold then the iris images belong to the same eye with high probability. The matching score range is <0.0, 1.0>. Its values can be interpreted as follows:

- Low values of the score, i.e. the range <0, 0.6>, are normalized using FAR values and this formula: score_L = -10 * log10(FAR) / 100. This means that a score of 0.3 corresponds to FAR = 1:1000 = 10^-3, and a score of 0.5 corresponds to FAR = 1:100000 = 10^-5 (evaluated on our large testing dataset of non-matching pairs).

- High values of the score, i.e. the range <0.8, 1.0>, are normalized using FRR values and this formula: score_H = 100/3 * (FRR + 2) / 100. This means that a score of 0.8 corresponds to FRR = 0.4, and a score of 0.9 corresponds to FRR = 0.7 (evaluated on our large testing dataset of matching pairs).

- Score values in the range (0.6, 0.8) are weighted averages of score_L and score_H.

This normalization helps users select the score threshold according to their needs. If the threshold is too low, e.g. 0.3, the chance of falsely accepting non-matching irises is quite high (FAR = 10^-3). If it is too high, e.g. 0.9, the chance of falsely rejecting matching irises is quite high (FRR = 0.7).

In SFE Toolkit use the function sfeIrisTemplateMatch.

Iris Template Identification

Identification is the process of finding the best matching candidates for the probe template in the template gallery (database). The maximum number of best candidates returned by the identification function can be configured, depending on the use case. Identification returns only candidates whose matching score with the probe template is higher than or equal to the matching score threshold. For the matching score threshold recommendation, please refer to the section Iris Template Verification.

Identification runs purely on the CPU, and the number of CPU cores it uses can be configured to balance identification performance against the overall CPU utilization of your application.

In SFE Toolkit use the function sfeIrisTemplateIdentify.

SFE Toolkit also supports the identification of entities. An Entity ID is used to group multiple iris templates of the same eye. The result of identification is a list of Entity IDs ordered by the best matching score among the iris templates associated with each Entity ID.

In SFE Toolkit use the function sfeIrisEntityIdentify.
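The entity ranking described above can be sketched as: keep the best score per Entity ID, then sort the entities by that score. `TemplateScore` and `rankEntities` are hypothetical, and the sketch leaves out the score threshold and candidate limit that a real identification call would apply.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical per-template match result: the entity the gallery template
// belongs to and its matching score with the probe template.
struct TemplateScore {
    std::string entity_id;
    float score;
};

// Keep the best score per entity, then order entities by that score.
std::vector<std::pair<std::string, float>>
rankEntities(const std::vector<TemplateScore> &scores) {
    std::unordered_map<std::string, float> best;
    for (const TemplateScore &s : scores) {
        auto it = best.find(s.entity_id);
        if (it == best.end() || s.score > it->second) {
            best[s.entity_id] = s.score;
        }
    }
    std::vector<std::pair<std::string, float>> ranked(best.begin(), best.end());
    std::sort(ranked.begin(), ranked.end(),
              [](const std::pair<std::string, float> &a,
                 const std::pair<std::string, float> &b) {
                  return a.second > b.second;
              });
    return ranked;
}
```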

Supported Platforms and Features

Face modality

| Solver | Face detect | Face landmarks | Face template extract | Face passive liveness |
|---|---|---|---|---|
| ONNXRT | accurate_mask | 0.25, 0.50 | fast, balanced, accurate_mask | distant, nearby |
| Rockchip RKNPU | accurate_mask | 0.25 | accurate_mask | distant |
| Rockchip RKNPU2 | accurate_mask | 0.25 | accurate_mask | distant |
| Ambarella | accurate_mask | 0.25 | balanced, accurate_mask | distant, nearby |
| Hailo | accurate_mask | 0.25 | accurate_mask | - |
| NXP iMX8 | accurate_mask | 0.25, 0.50 | balanced, accurate_mask | - |

Iris modality

| Solver | Iris detect | Iris template extract |
|---|---|---|
| ONNXRT | yes | yes |
| Rockchip RKNPU2 | yes | yes |

Input Image Data

SmartFace Embedded can detect and recognize features of faces in images. The input image is formatted as a raw color (3-channel) image. The image data structure is an array of bytes (unsigned char) where each pixel consists of 3 bytes, one for each color channel, in BGR order. The origin of the image is in the upper-left corner. The size of the image data array can be calculated as image height * image width * 3 bytes. The SFE Toolkit provides the function sfeImageLoad for loading the most common image formats (JPEG, PNG, BMP, … ) into SFEImage, a format suitable for SmartFace Embedded processing.

Example

```cpp
SFEImage image{};

// Load image data from file
auto image_data = utils::readFile(image_detection);

// Decode image from data
SFEError error = sfeImageLoad(image_data.data(), image_data.size(), &image);
utils::checkError(error);
```
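The raw buffer layout described in this section can be sketched with plain arithmetic. The helpers are hypothetical, and storing rows one after another without padding is an assumption consistent with the height * width * 3 size.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Size in bytes of a raw 3-channel (BGR) image: height * width * 3.
std::size_t bgrBufferSize(std::size_t width, std::size_t height) {
    return height * width * 3;
}

// Byte offset of the first channel (B) of pixel (x, y). The origin is the
// upper-left corner; rows are ASSUMED to be stored one after another with
// no padding.
std::size_t pixelOffset(std::size_t x, std::size_t y, std::size_t width) {
    return (y * width + x) * 3;
}

// Read one pixel as {B, G, R}.
std::array<unsigned char, 3> readBgr(const std::vector<unsigned char> &data,
                                     std::size_t x, std::size_t y,
                                     std::size_t width) {
    const std::size_t offset = pixelOffset(x, y, width);
    assert(offset + 2 < data.size());
    return {data[offset], data[offset + 1], data[offset + 2]};
}
```

For the 730x470 image used earlier, the buffer holds 730 * 470 * 3 = 1029300 bytes.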