Messaging

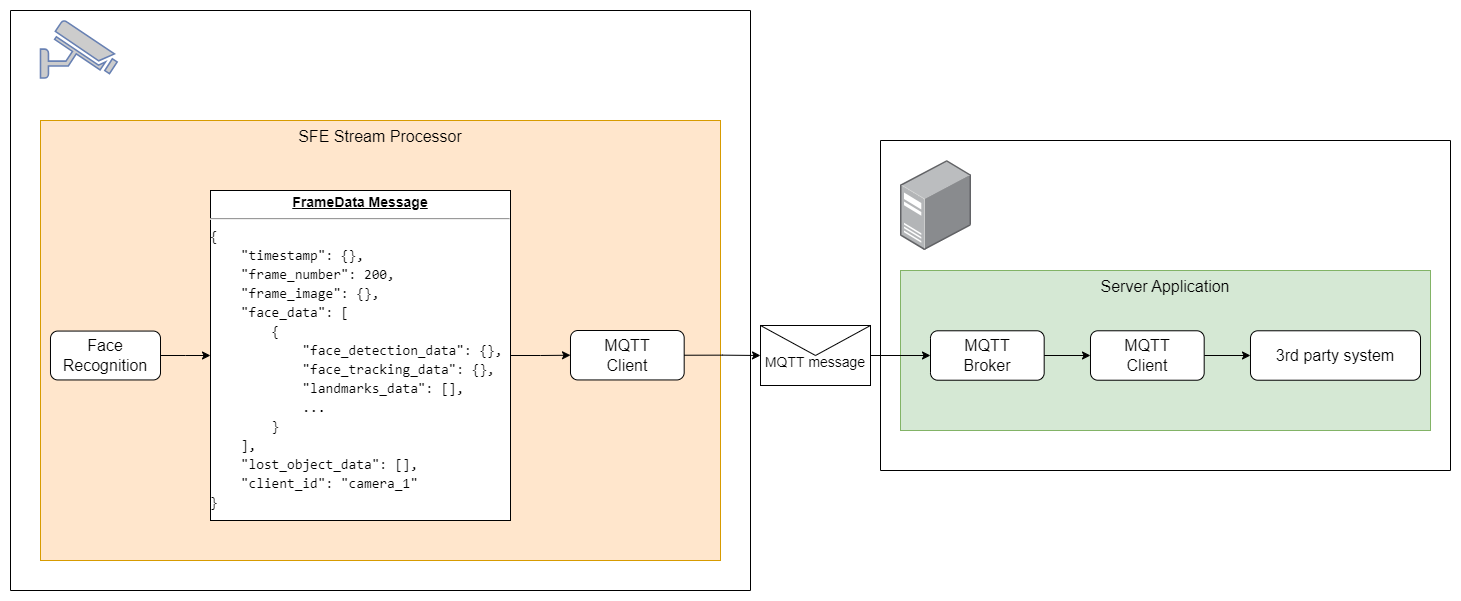

SmartFace Embedded Stream Processor supports two-way communication between the edge device and server applications.

The Stream Processor uses the MQTT protocol to send face recognition results and metadata (FrameData) from the edge device to your local or server application. If you do not want to use MQTT, FrameData can also be sent over the WebSocket protocol.

The format of the FrameData message with an example message can be found below:

SFE Stream Processor Frame Data via MQTT

This part of the documentation describes the MQTT topic used by the SmartFace Embedded Stream Processor for sending the FrameData.

Using MQTT for communication with the camera requires an MQTT broker and an MQTT client to run as part of your solution.

SmartFace Platform is already integrated with SFE Stream Processor using MQTT.

Root topic

All topics are prefixed with the root topic (:settings.connection.topic) and the client ID (:settings.connection.client_id), both of which are configured in the settings file.

FrameData Topic

:topic/:client_id/frame_data

=> Periodic(FrameData)
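As a minimal sketch of how the topic pattern above is assembled, the helper below joins a root topic and a client ID into the FrameData topic. The function name and the root topic value `edge-stream` are hypothetical and chosen only for illustration; the real values come from your settings file.

```python
def frame_data_topic(root_topic: str, client_id: str) -> str:
    """Build the FrameData topic following :topic/:client_id/frame_data."""
    # Strip leading/trailing slashes so the parts join with one separator each.
    parts = [root_topic.strip("/"), client_id.strip("/"), "frame_data"]
    return "/".join(parts)


if __name__ == "__main__":
    # "edge-stream" is a hypothetical root topic used only for this sketch.
    print(frame_data_topic("edge-stream", "dev"))
```

An MQTT client in your solution would subscribe to the resulting string to receive the periodic FrameData messages.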

The FrameData topic is currently the primary output topic of the Stream Processor. It is used to periodically send frame data to the MQTT broker. Depending on the configuration, the frame data can contain the following information:

- Face detection bounding boxes

- Face landmarks

- Face crops

- Face templates

- Face identification results

- Face liveness detection results

- Active tracking ids

- New tracking ids

- Lost tracking ids

- Full frame data

See also the frame_data proto message format
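The tracking-related items in the list above can be read from a decoded FrameData message. The sketch below assumes the message has already been parsed into a Python dictionary (for example from the Json format), that `tracking_state` uses the numeric TrackingState values from the frame_data proto (New = 0, Tracked = 1), and that lost objects are reported in `lost_object_data`. Treating only the Tracked state as "active" is an assumption of this example, and the function name is hypothetical.

```python
NEW, TRACKED = 0, 1  # TrackingState values taken from the frame_data proto


def tracking_summary(frame_data: dict) -> dict:
    """Group tracking UUIDs of one FrameData message into new/active/lost."""
    new, active = [], []
    for face in frame_data.get("face_data") or []:
        tracking = face.get("face_tracking_data") or {}
        tracking_uuid = tracking.get("tracking_uuid")
        if tracking_uuid is None:
            continue  # face entry without tracking data
        state = tracking.get("tracking_state")
        if state == NEW:
            new.append(tracking_uuid)
        elif state == TRACKED:
            active.append(tracking_uuid)
    lost = [
        obj["tracking_uuid"]
        for obj in frame_data.get("lost_object_data") or []
        if "tracking_uuid" in obj
    ]
    return {"new": new, "active": active, "lost": lost}
```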

FrameData Message Format

SFE Stream Processor supports sending FrameData messages in the following formats:

- Protobuf (default)

- Json

- Yaml

To change the message format, set messaging.format in the configuration. The content of the message depends on the SFE Stream Processor configuration. See also the example FrameData message and the frame_data protobuf message.

FrameData message example (Json)

{

"timestamp": {

"seconds": 1688110510,

"nanos": 440625640

},

"frame_number": 200,

"frame_image": {

"width": 1280,

"height": 720,

"data": null,

"image_format": 0

},

"face_data": [

{

"face_detection_data": {

"bounding_box": {

"x": 0.61946577,

"y": 0.3427477,

"width": 0.13254198,

"height": 0.30173743

},

"detection_confidence": 0.24703383,

"raw_detection_confidence": 0.99232244

},

"face_tracking_data": {

"tracking_id": 3,

"tracking_uuid": "c8052a96-8c9d-4765-be94-1d6282cf4b32",

"tracking_state": 1

},

"landmarks_data": [

{

"keypoint_type": 0,

"confidence": 6555.97,

"x": 0.6450057,

"y": 0.45749876

},

{

"keypoint_type": 1,

"confidence": 7688.9907,

"x": 0.655019,

"y": 0.45629612

},

{

"keypoint_type": 2,

"confidence": 8230.616,

"x": 0.66622674,

"y": 0.4607573

},

{

"keypoint_type": 3,

"confidence": 8671.502,

"x": 0.69593364,

"y": 0.46342638

},

{

"keypoint_type": 4,

"confidence": 9183.3125,

"x": 0.7068537,

"y": 0.4600336

},

{

"keypoint_type": 5,

"confidence": 9303.206,

"x": 0.71774375,

"y": 0.46332029

},

{

"keypoint_type": 6,

"confidence": 9617.738,

"x": 0.68052393,

"y": 0.46196705

},

{

"keypoint_type": 7,

"confidence": 8992.809,

"x": 0.666,

"y": 0.5363946

},

{

"keypoint_type": 8,

"confidence": 9485.234,

"x": 0.6776546,

"y": 0.53633755

},

{

"keypoint_type": 9,

"confidence": 9714.433,

"x": 0.6920333,

"y": 0.53950506

},

{

"keypoint_type": 10,

"confidence": 8822.452,

"x": 0.6788875,

"y": 0.54646987

},

{

"keypoint_type": 11,

"confidence": 8965.842,

"x": 0.66079986,

"y": 0.56907797

},

{

"keypoint_type": 12,

"confidence": 9792.486,

"x": 0.679145,

"y": 0.5778152

},

{

"keypoint_type": 13,

"confidence": 9667.067,

"x": 0.6996888,

"y": 0.57404685

},

{

"keypoint_type": 14,

"confidence": 9861.519,

"x": 0.67930084,

"y": 0.56860363

},

{

"keypoint_type": 15,

"confidence": 9939.953,

"x": 0.679856,

"y": 0.59032

},

{

"keypoint_type": 16,

"confidence": 7141.656,

"x": 0.63856435,

"y": 0.43423924

},

{

"keypoint_type": 17,

"confidence": 8929.125,

"x": 0.66690445,

"y": 0.43565476

},

{

"keypoint_type": 18,

"confidence": 9254.477,

"x": 0.6952186,

"y": 0.43642095

},

{

"keypoint_type": 19,

"confidence": 8736.874,

"x": 0.72563595,

"y": 0.4419014

},

{

"keypoint_type": 20,

"confidence": 6720.5347,

"x": 0.6298149,

"y": 0.46593603

},

{

"keypoint_type": 21,

"confidence": 9549.333,

"x": 0.67872494,

"y": 0.641848

},

{

"keypoint_type": 22,

"confidence": 7431.887,

"x": 0.7382992,

"y": 0.47668388

}

],

"face_mask_data": {

"confidence": -3390.0

},

"cropping_data": {

"crop_extension": 2,

"crop_box": {

"x": 0.5211221,

"y": 0.23136452,

"width": 0.31893653,

"height": 0.56699824

},

"crop_image": {

"width": 250,

"height": 250,

"data": "...",

"image_format": 0

}

},

"liveness_data": [

{

"liveness_type": 1,

"score": 0.7390711,

"raw_score": 0.256,

"liveness_conditions": [],

"conditions_met": false

}

],

"template_data": {

"template": "SUNGADFY/PwKA/8G9P71AAQD+ggJ8wH6CAIGBf4DB/oD/vn+CfwCBfgADgcNAf4ABAf8CP4L+Pz0+QYGCAkH/gIC//sKAwIEAwEDBAID+v4DDAcFAwUMAPcBCgAAAfoLBwQBAgMB/P74AQcA/wAACQL8APMFBf8BAwIGAgH9Bv3++wP9AfoB9vr+/AYD+gYEAP0CAwgH9/v4AgECAQEHAQQCAAD+BQLzC/wD/v0L/QH7/wsA/gX9//gH9v38Av8HAADzAAYA/P78APz9Cf8EBgEBAPgBAAgBAwUDAvYQAP/7+vj4AgAE/wD5Bf8C/gH8+g74A/oIBf78BfoFAfwHD/T++gIB/Pr+AAUDBQMLAAD6C/8EBv8F/An+Av/9+AL+Awn3Awn4AQT5Av79B/8F/fnzAfb8+gL+/QUI//35/wf6/P//DwID/gMFC/38+gUCAQAAAwAF/AML8/0KCvf6/f8B+/7++QALAgj6AQUL+wL///n/BAH/CAT8/AIE/gcI+f0BAP77/wMI/gACB/r2AgoIAgb09/8C+f4C/Pf4Bf0CAvgBAP/7A/cAAP4HBwH8/vv7/voA/v75AAD7BvsCBgH5Av8ECgID/AT0AwT7+QAFBPn/9AsEBgQEAQQE/P3zBPz9A/r5AP0D/gP+/gYPBP77Avf69woECQH+A/r5EgQF/gH6AQZTBnP/"

},

"identification_data": [

{

"id": 0,

"uuid": "b8f21151-0c8e-4c6a-8f29-882b405ff9aa",

"score": 0.41636264,

"dsid": "GEN_ID_dbd738aa-cb94-4766-89d4-a2dfd79ffc0c||2",

"meta_data": []

}

],

"identification": true

}

],

"lost_object_data": [],

"client_id": "dev"

}
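A consumer of the Json format typically needs two conversions: turning the `timestamp` object into a date-time value, and scaling the normalized bounding boxes to pixels. The following is a minimal sketch under the assumption that `frame_image.width` and `frame_image.height` are present even when `data` is null, as in the example above. The function names are hypothetical.

```python
import json
from datetime import datetime, timezone


def frame_timestamp(frame_data: dict) -> datetime:
    """Convert the seconds/nanos timestamp to a UTC datetime."""
    ts = frame_data["timestamp"]
    # datetime only has microsecond precision, so nanoseconds are truncated.
    micros = ts.get("nanos", 0) // 1000
    base = datetime.fromtimestamp(ts["seconds"], tz=timezone.utc)
    return base.replace(microsecond=micros)


def box_to_pixels(box: dict, frame_width: int, frame_height: int) -> tuple:
    """Scale a normalized <0,1> box to (x, y, width, height) in pixels."""
    return (
        round(box["x"] * frame_width),
        round(box["y"] * frame_height),
        round(box["width"] * frame_width),
        round(box["height"] * frame_height),
    )


def face_boxes(payload: str) -> list:
    """Parse a Json FrameData payload and return pixel boxes of all faces."""
    frame = json.loads(payload)
    width = frame["frame_image"]["width"]
    height = frame["frame_image"]["height"]
    return [
        box_to_pixels(face["face_detection_data"]["bounding_box"], width, height)
        for face in frame.get("face_data") or []
    ]
```

The same scaling applies to `crop_box` and to landmark coordinates, since the proto comments describe them as relative to the full frame.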

Protobuf Messages

SFE Stream Processor protobuf messages are documented below:

- innovatrics.embedded.stream_processor.frame_data

- innovatrics.embedded.stream_processor.common

- innovatrics.embedded.stream_processor.health

- innovatrics.embedded.stream_processor.db

- innovatrics.embedded.stream_processor.user

frame_data

You can find the innovatrics.embedded.stream_processor.frame_data protobuf message structure below.

innovatrics.embedded.stream_processor.frame_data also imports common.proto

syntax = "proto3";

package innovatrics.embedded.stream_processor.frame_data;

import "google/protobuf/timestamp.proto";

import "innovatrics/embedded/stream_processor/common.proto";

message FrameData {

// timestamp of the frame the message was generated for

google.protobuf.Timestamp timestamp = 1;

// incremental frame number

uint64 frame_number = 2;

// full frame image

innovatrics.embedded.stream_processor.common.Image frame_image = 3;

// vector of detected faces

repeated FaceData face_data = 4;

// vector of lost objects

repeated LostObjectData lost_object_data = 5;

// client_id (same as MQTT client ID) of the source camera/device which produced the message

string client_id = 6;

}

message FaceData {

// face detection data

FaceDetectionData face_detection_data = 1;

// face tracking data

TrackingData face_tracking_data = 2;

// face landmarks data

repeated FaceLandmarkData landmarks_data = 3;

// face mask status data

optional FaceMaskData face_mask_data = 4;

// cropping data

optional CroppingData cropping_data = 5;

// liveness detection data

repeated LivenessData liveness_data = 6;

// face template extraction

optional innovatrics.embedded.stream_processor.common.TemplateData template_data = 7;

// face identification

repeated IdentificationData identification_data = 8;

// face identification was performed

bool identification = 9;

}

message FaceDetectionData {

// bounding box of detected face

innovatrics.embedded.stream_processor.common.BoundingBox bounding_box = 1;

// normalized value of detection confidence of detected face - range <0,1>

float detection_confidence = 2;

// raw value of detection confidence of detected face - range <0,1>

float raw_detection_confidence = 3;

}

message TrackingData {

// tracking ID (ByteTrack) assigned to detected object

uint32 tracking_id = 1;

// tracking UUID assigned to detected object

string tracking_uuid = 2;

// tracking state (ByteTrack) of detected object

TrackingState tracking_state = 3;

}

message FaceLandmarkData {

// type of the keypoint detected in the face

FaceKeypointType keypoint_type = 1;

// confidence of the keypoint detected in the face - range <0,1>

float confidence = 2;

// X coordinate of the keypoint relative to full frame - range <0,1>

float x = 3;

// Y coordinate of the keypoint relative to full frame - range <0,1>

float y = 4;

}

message FaceMaskData {

// face mask detection confidence - range <0,1>

float confidence = 1;

}

message CroppingData {

// extension of the crop image specified in settings

uint32 crop_extension = 1;

// bounding box of the crop

optional innovatrics.embedded.stream_processor.common.BoundingBox crop_box = 2;

// crop image of detected object

optional innovatrics.embedded.stream_processor.common.Image crop_image = 3;

}

message IdentificationData {

// entity id, deprecated

uint32 id = 1;

// uuid - unique 36 character string (32 digits separated by hyphens)

string uuid = 2;

// match score

float score = 3;

// DSID

string dsid = 4;

// User metadata

repeated innovatrics.embedded.stream_processor.common.MetaData meta_data = 5;

}

// tracking state enumeration (defined by ByteTrack)

enum TrackingState {

New = 0;

Tracked = 1;

Lost = 2;

Removed = 3;

reserved 4 to max;

}

// face keypoint type enumeration

enum FaceKeypointType {

RightEyeOuterCorner = 0;

RightEyeCentre = 1;

RightEyeInnerCorner = 2;

LeftEyeInnerCorner = 3;

LeftEyeCentre = 4;

LeftEyeOuterCorner = 5;

NoseRoot = 6;

NoseRightBottom = 7;

NoseTip = 8;

NoseLeftBottom = 9;

NoseBottom = 10;

MouthRightCorner = 11;

MouthCenter = 12;

MouthLeftCorner = 13;

MouthUpperEdge = 14;

MouthLowerEdge = 15;

RightEyebrowOuterEnd = 16;

RightEyebrowInnerEnd = 17;

LeftEyebrowInnerEnd = 18;

LeftEyebrowOuterEnd = 19;

RightEdge = 20;

ChinTip = 21;

LeftEdge = 22;

reserved 23 to max;

}

// Liveness data

message LivenessData {

// type of liveness detection algorithm

LivenessType liveness_type = 1;

// liveness detection score, default 0

float score = 2;

// liveness detection raw score, default 0

float raw_score = 3;

// liveness conditions and thresholds

repeated Condition liveness_conditions = 4;

// liveness conditions were met, default false

bool conditions_met = 5;

}

// liveness detection algorithm enumeration

enum LivenessType {

// Passive liveness calculated from distant solver

PassiveDistant = 0;

// Passive liveness calculated from nearby solver

PassiveNearby = 1;

reserved 2 to max;

}

// condition type enumeration

enum ConditionType {

FaceSize = 0;

FaceRelativeArea = 1;

FaceRelativeAreaInImage = 2;

YawAngle = 3;

PitchAngle = 4;

RollAngle = 5;

Sharpness = 6;

Brightness = 7;

Contrast = 8;

reserved 9 to max;

}

message Condition {

// condition type

ConditionType condition_type = 1;

// raw value of the condition

float value = 2;

// lower threshold

optional float lower_threshold = 4;

// optional upper threshold

optional float upper_threshold = 5;

// tag if conditions for liveness evaluation were met

bool condition_met = 6;

}

message LostObjectData {

// tracking UUID of the lost object

string tracking_uuid = 1;

// timestamp of the frame where the object first appeared (New in ByteTrack)

google.protobuf.Timestamp first_time_appeared = 2;

// timestamp of the frame where ByteTrack changed the tracking status of the object to Removed

google.protobuf.Timestamp last_time_appeared = 3;

// crop of best appearance of the object in tracklet - NOT SUPPORTED YET

optional innovatrics.embedded.stream_processor.common.Image best_crop_image = 4;

}
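In the Json example, enum fields such as `tracking_state`, `keypoint_type` and `liveness_type` appear as plain integers. The sketch below mirrors the enumerations from the proto definition above as Python enums so that those integers can be turned into readable names. The Python classes and the `label` helper are illustrative and are not part of any generated protobuf code.

```python
from enum import IntEnum


class TrackingState(IntEnum):
    New = 0
    Tracked = 1
    Lost = 2
    Removed = 3


class LivenessType(IntEnum):
    PassiveDistant = 0
    PassiveNearby = 1


# Names listed in proto order; start=0 makes the values match the proto.
FaceKeypointType = IntEnum(
    "FaceKeypointType",
    [
        "RightEyeOuterCorner", "RightEyeCentre", "RightEyeInnerCorner",
        "LeftEyeInnerCorner", "LeftEyeCentre", "LeftEyeOuterCorner",
        "NoseRoot", "NoseRightBottom", "NoseTip", "NoseLeftBottom",
        "NoseBottom", "MouthRightCorner", "MouthCenter", "MouthLeftCorner",
        "MouthUpperEdge", "MouthLowerEdge", "RightEyebrowOuterEnd",
        "RightEyebrowInnerEnd", "LeftEyebrowInnerEnd", "LeftEyebrowOuterEnd",
        "RightEdge", "ChinTip", "LeftEdge",
    ],
    start=0,
)


def label(enum_cls, value: int) -> str:
    """Return the enum name for a numeric value, or a marker if unknown."""
    try:
        return enum_cls(value).name
    except ValueError:
        # Values in the reserved range are not defined by the proto.
        return f"Unknown({value})"
```

For instance, `label(TrackingState, 1)` applied to the `tracking_state` of the example message yields `Tracked`.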

common

You can find the innovatrics.embedded.stream_processor.common protobuf message structure below.

syntax = "proto3";

package innovatrics.embedded.stream_processor.common;

/// Template data

message TemplateData {

bytes template = 1;

}

/// Image message

message Image {

// width of the image

uint32 width = 1;

// height of the image

uint32 height = 2;

// image data in specified format

optional bytes data = 3;

// format of the image data specified in settings

ImageFormat image_format = 4;

}

// Image format

enum ImageFormat {

// default

Raw = 0;

// Jpeg

Jpeg = 1;

// Png

Png = 2;

reserved 3 to max;

}

// Error message

message Error {

// error message

string message = 1;

}

// Normalized bounding box

message BoundingBox {

// X coordinate of left upper corner of bounding box relative to full frame - range <0,1>

float x = 1;

// Y coordinate of left upper corner of bounding box relative to full frame - range <0,1>

float y = 2;

// width of bounding box relative to width of full frame - range <0,1>

float width = 3;

// height of bounding box relative to height of full frame - range <0,1>

float height = 4;

}

// String meta data

message MetaData {

string key = 1;

string value = 2;

}
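The `TemplateData` and `MetaData` messages above are small, but both usually need a conversion step on the receiving side. The sketch below assumes a message decoded from the Json format, where the `bytes` template is carried as a standard Base64 string (the template in the example message has that shape) and metadata is a list of key/value objects. Both helper names are hypothetical.

```python
import base64


def decode_template(template_data: dict) -> bytes:
    """Return the raw template bytes from a Json-decoded TemplateData."""
    # Assumption: bytes fields are Base64-encoded in the Json format.
    return base64.b64decode(template_data["template"])


def metadata_to_dict(meta_data: list) -> dict:
    """Flatten a list of MetaData key/value entries into one dictionary."""
    # If a key repeats, the last entry wins.
    return {item["key"]: item["value"] for item in meta_data}
```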

health

You can find the innovatrics.embedded.stream_processor.health protobuf message structure below.

innovatrics.embedded.stream_processor.health also imports common.proto

syntax = "proto3";

package innovatrics.embedded.stream_processor.health;

// Used for reporting a status of stream processor

message HealthReport {

// Stream processor version

string version = 1;

// Status of the stream processor

HealthStatus status = 2;

}

// Possible statuses of stream processor

enum HealthStatus {

// Indicates stream processor is online

Online = 0;

// Indicates stream processor is offline

Offline = 1;

// Indicates stream processor has disconnected

OfflineDisconnected = 2;

reserved 3 to max;

}
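A receiving application may want to reduce a `HealthReport` to a simple online/offline flag. The following sketch assumes the report has already been decoded into a dictionary; how and on which topic the report is delivered is not described in this section. It accepts the status either as a number or as an enum name, and it treats a missing status as the proto3 default value 0 (Online), which is an assumption of this example.

```python
from enum import IntEnum


class HealthStatus(IntEnum):
    Online = 0
    Offline = 1
    OfflineDisconnected = 2


def parse_status(raw) -> HealthStatus:
    """Accept either the numeric value or the enum name of the status."""
    if isinstance(raw, str):
        return HealthStatus[raw]
    return HealthStatus(raw)


def is_online(report: dict) -> bool:
    """True when the decoded HealthReport says the processor is online."""
    # Assumption: an omitted status means the proto3 default, 0 (Online).
    return parse_status(report.get("status", 0)) is HealthStatus.Online
```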

db

You can find the innovatrics.embedded.stream_processor.db protobuf message structure below.

innovatrics.embedded.stream_processor.db also imports common.proto

syntax = "proto3";

package innovatrics.embedded.stream_processor.db;

import "innovatrics/embedded/stream_processor/common.proto";

// Request database update

message UpdateRequest {

// From DSID

string from_dsid = 1;

// To DSID

string to_dsid = 2;

// Failure DSID

string failure_dsid = 3;

// Clear database flag

bool clear = 4;

// Update / insert

repeated MemberUpsert updates = 5;

// Delete

repeated MemberDelete deletes = 6;

}

// Set or update user

message MemberUpsert {

// Member info

MemberInfo member_info = 1;

// Templates

repeated innovatrics.embedded.stream_processor.common.TemplateData templates = 2;

// Member metadata

repeated innovatrics.embedded.stream_processor.common.MetaData meta_data = 3;

}

// Users to delete

message MemberDelete {

// Member info

MemberInfo member_info = 1;

}

// Member info used to identify user

message MemberInfo {

// uuid - unique 36 character string (32 digits separated by hyphens)

string uuid = 1;

}

// Update response message

message UpdateResponse {

// DSID

string current_dsid = 1;

// Error message in case of an error

optional string error_message = 2;

}

// Request for status

message StatusRequest {}

// Status response message

message StatusResponse {

// DSID

string current_dsid = 1;

// Error message in case of an error

optional string error_message = 2;

}
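`MemberInfo.uuid` is described above as a 36-character string of 32 digits separated by hyphens. Before building an `UpdateRequest`, a client could check that shape with a small helper like the one below. This is a sketch using Python's standard `uuid` module; the function name is hypothetical and the Stream Processor may apply its own validation.

```python
import uuid


def is_valid_member_uuid(value: str) -> bool:
    """Check for the canonical 36-character hyphenated UUID form."""
    if len(value) != 36:
        return False
    try:
        parsed = uuid.UUID(value)
    except ValueError:
        return False
    # uuid.UUID tolerates misplaced hyphens, so compare with the canonical
    # text form to make sure they sit at the usual positions.
    return str(parsed) == value.lower()
```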

user

You can find the innovatrics.embedded.stream_processor.user protobuf message structure below.

innovatrics.embedded.stream_processor.user also imports common.proto

syntax = "proto3";

package innovatrics.embedded.stream_processor.user;

import "innovatrics/embedded/stream_processor/common.proto";

// Record stored in database

message Record {

// Record UUID

string uuid = 1;

// Face templates

repeated innovatrics.embedded.stream_processor.common.TemplateData templates = 2;

// Metadata

repeated innovatrics.embedded.stream_processor.common.MetaData metadata = 3;

}

// A collection of Records

message Records {

// Records

repeated Record records = 1;

}