Performance measurements

The performance of the Digital Identity Service (DIS) has been measured on the AWS platform to assist with infrastructure planning, focusing on the full identity verification scenario. All test images were produced by the Mobile and Web components.

Evaluation

Identity verification process:

- Upload selfie

- Check passive liveness on selfie

- Upload and OCR both sides of a Slovak national ID card

- Get customer, Inspect customer and Inspect customer document requests

- Get document front and back pages

- Delete customer

A total of 750 full identity verification processes were evaluated. With 3 concurrent threads, throughput reached 0.48 verifications per second.
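As a rough consistency check (not part of the original measurement), Little's law relates concurrency, latency and throughput in a closed-loop load test. With 3 threads and the average scenario time of 5803.40 ms reported in the table below, throughput cannot exceed about 0.52 verifications per second. The sketch assumes each thread starts its next scenario immediately; the measured 0.48/s sitting slightly below the bound is plausibly due to per-iteration overhead in the test client, but that explanation is an assumption.

```python
# Little's law for a closed-loop load test: throughput <= concurrency / latency.
# Assumption: zero think time between scenarios on each thread.
CONCURRENT_THREADS = 3
AVG_SCENARIO_LATENCY_S = 5803.40 / 1000  # average "Identity verification scenario" time [s]
MEASURED_THROUGHPUT = 0.48  # verifications/s, as reported above

def max_throughput(threads: int, latency_s: float) -> float:
    """Upper bound on completed scenarios per second for a closed-loop test."""
    return threads / latency_s

bound = max_throughput(CONCURRENT_THREADS, AVG_SCENARIO_LATENCY_S)
print(f"upper bound: {bound:.3f} verifications/s")
print(f"measured throughput is {MEASURED_THROUGHPUT / bound:.0%} of the bound")
```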

| Operation | Median [ms] | Average [ms] | 95th percentile [ms] |
|---|---|---|---|
| Create customer | 16.00 | 17.74 | 22.00 |
| Provide customer selfie | 145.00 | 151.38 | 214.05 |
| Create liveness | 10.00 | 11.08 | 15.05 |
| Passive liveness selfie with link | 26.00 | 27.04 | 34.00 |
| Evaluate passive liveness | 460.50 | 475.60 | 590.55 |
| Create document | 11.00 | 11.66 | 15.00 |
| Create document front page | 1904.00 | 1869.82 | 2269.20 |
| Create document back page | 2097.00 | 2073.40 | 2602.20 |
| Inspect document | 309.00 | 329.41 | 570.80 |
| Inspect customer | 666.00 | 680.94 | 900.20 |
| Get customer | 15.00 | 15.93 | 21.10 |
| Get document front page | 58.00 | 62.02 | 83.00 |
| Get document back page | 60.00 | 63.69 | 87.00 |
| Delete customer | 13.00 | 13.82 | 19.15 |
| Identity verification scenario | 5882.50 | 5803.40 | 6776.60 |
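Because the scenario runs its operations one after another (an inference from the process list, not stated explicitly), the per-operation averages should add up to the scenario average. The sketch below checks this using the values from the table and lists the operations that take the largest share of the time; it is an illustration, not part of the measured results.

```python
# Average latencies [ms] copied from the table in this section.
OPERATION_AVG_MS = {
    "Create customer": 17.74,
    "Provide customer selfie": 151.38,
    "Create liveness": 11.08,
    "Passive liveness selfie with link": 27.04,
    "Evaluate passive liveness": 475.60,
    "Create document": 11.66,
    "Create document front page": 1869.82,
    "Create document back page": 2073.40,
    "Inspect document": 329.41,
    "Inspect customer": 680.94,
    "Get customer": 15.93,
    "Get document front page": 62.02,
    "Get document back page": 63.69,
    "Delete customer": 13.82,
}
SCENARIO_AVG_MS = 5803.40

total_ms = sum(OPERATION_AVG_MS.values())
print(f"sum of operation averages: {total_ms:.2f} ms (scenario average: {SCENARIO_AVG_MS:.2f} ms)")

# The three most expensive operations and their share of the summed time.
top = sorted(OPERATION_AVG_MS.items(), key=lambda item: item[1], reverse=True)[:3]
for name, avg in top:
    print(f"{name}: {avg / total_ms:.1%}")
```

The sum comes to 5803.53 ms, within rounding of the reported scenario average, and uploading the two document pages accounts for roughly two thirds of the total.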

During the evaluation, CPU utilization of DIS peaked at approximately 85%, while memory usage remained stable.

NOTE: The performance above was measured on a minimal-requirements configuration. You may achieve better results by using a machine with more CPU cores or by scaling the application horizontally.
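For a first estimate of horizontal scaling, the measured single-instance throughput can be divided into a target throughput. This is a minimal sketch under a strong assumption: that throughput scales linearly with the number of DIS instances. It ignores shared resources such as Redis and load-balancer overhead, so real deployments should be verified with their own load tests. The function name `instances_needed` is hypothetical.

```python
import math

# Measured throughput of one DIS instance (verifications/s), from this section.
MEASURED_THROUGHPUT_PER_INSTANCE = 0.48

def instances_needed(target_per_s: float,
                     per_instance: float = MEASURED_THROUGHPUT_PER_INSTANCE) -> int:
    """Hypothetical estimate assuming throughput grows linearly with instance count."""
    if target_per_s <= 0:
        raise ValueError("target throughput must be positive")
    return math.ceil(target_per_s / per_instance)

for target in (0.5, 1.0, 2.0):
    print(f"{target} verifications/s -> {instances_needed(target)} instance(s)")
```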

Configuration

Digital Identity Service

- Version: 1.53.0

- Deployment: DIS runs as a Docker container on an AWS machine with resources equivalent to an AWS c6a.2xlarge instance.

The server uses the default application configuration with SSE and AVX optimizations enabled.

The Docker image is built using the Dockerfile provided in the distribution package.

Redis

- Version: 7.1.0

- Deployment: AWS ElastiCache cluster with one cache.m6g.large node.

Testing Tool - JMeter

- Version: 5.5

- Deployment: JMeter runs as a Docker container on an AWS machine with resources equivalent to an AWS c6a.xlarge instance.

Testing Setup

The setup consisted of a single DIS instance connected to a Redis cluster running on a separate machine. The testing client was deployed as a single instance generating requests from multiple threads. All services were deployed in the same Amazon AWS region to minimize network latency.

Scaling the infrastructure to the estimated number of transaction requests

Example Use Case

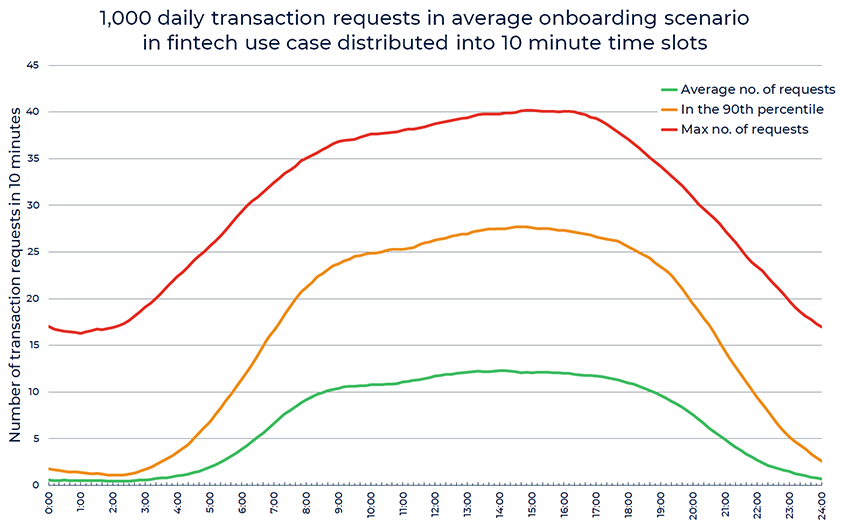

The distribution of user requests that generate server transactions was measured across multiple installations for a fintech use case in European countries. It reflects the behavior of a specific population in a specific use case and cannot be generalized to all use cases. Integrators of DIS are strongly encouraged to perform their own measurements for their use case.
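To build such a distribution from your own traffic, request timestamps can be grouped into 10-minute slots. This is a minimal sketch, assuming the timestamps are already available as Python `datetime` objects (for example, parsed from your own access logs); the sample data and the helper names `slot_start` and `requests_per_slot` are hypothetical.

```python
from collections import Counter
from datetime import datetime

SLOT_MINUTES = 10

def slot_start(ts: datetime, slot_minutes: int = SLOT_MINUTES) -> datetime:
    """Round a timestamp down to the start of its slot."""
    return ts.replace(minute=ts.minute - ts.minute % slot_minutes, second=0, microsecond=0)

def requests_per_slot(timestamps) -> Counter:
    """Count requests falling into each slot."""
    return Counter(slot_start(ts) for ts in timestamps)

# Hypothetical request timestamps for illustration only.
sample = [
    datetime(2024, 5, 6, 9, 1, 30),
    datetime(2024, 5, 6, 9, 8, 0),
    datetime(2024, 5, 6, 9, 12, 45),
    datetime(2024, 5, 6, 14, 59, 59),
]
for start, count in sorted(requests_per_slot(sample).items()):
    print(start.strftime("%H:%M"), count)
```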

Applying this behavior to a hypothetical daily volume of 1,000 transactions, the requests can be split into 10-minute slots across an average working day. The distribution is shown in the next chart:

During daytime hours, there would be on average fewer than 15 requests per 10 minutes, meaning any machine would be idle most of the time if only 1,000 transactions are performed daily.
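To put the idle time into numbers, the arrival rate can be compared with the measured single-instance throughput of 0.48 verifications per second. This sketch assumes requests arrive at a steady rate within the slot and that the measured throughput applies unchanged; the function name `utilization` is hypothetical.

```python
SLOT_SECONDS = 10 * 60
CAPACITY_PER_S = 0.48  # measured verifications/s for one DIS instance

def utilization(requests_in_slot: float, capacity_per_s: float = CAPACITY_PER_S) -> float:
    """Fraction of one instance's measured capacity used by a steady arrival rate."""
    return (requests_in_slot / SLOT_SECONDS) / capacity_per_s

print(f"capacity per 10-minute slot: {CAPACITY_PER_S * SLOT_SECONDS:.0f} verifications")
print(f"15 requests per 10 minutes use {utilization(15):.1%} of capacity")
```

At 15 requests per slot, a single instance would use only about 5% of the capacity it reached in the test.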

The peak is around 40 requests per 10 minutes. These may, of course, arrive in a short burst. Whether a throughput of 0.5 or 1 req/s is needed to handle such a burst depends on the desired transaction response latency.
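A back-of-the-envelope way to weigh these options: if all 40 requests arrive at the same instant and are processed at a constant rate, the last one completes after roughly the burst size divided by the throughput. This sketch ignores other concurrent traffic and queueing details; the function name `burst_drain_seconds` is hypothetical.

```python
def burst_drain_seconds(burst_size: int, throughput_per_s: float) -> float:
    """Approximate time until the last request of a simultaneous burst completes."""
    if throughput_per_s <= 0:
        raise ValueError("throughput must be positive")
    return burst_size / throughput_per_s

# 0.48 is the measured single-instance throughput; 0.5 and 1 are the options discussed above.
for throughput in (0.48, 0.5, 1.0):
    print(f"{throughput} req/s: last of 40 requests done after ~{burst_drain_seconds(40, throughput):.0f} s")
```

Under these assumptions, doubling the throughput from 0.5 to 1 req/s cuts the worst-case wait in such a burst from about 80 s to about 40 s.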